“Trust” in human-machine collaboration holds the key to fully exploring and using Artificial Intelligence (AI) and Autonomy for military purposes.

“How do you think the Mark One chest piece is going to hold up? The suit’s at 48% power and falling, Sir.” Iron Man’s Tony Stark trusted Jarvis with his most important missions, and the machine never let him down. That kind of interaction between humans and machines in a real conflict zone may not be too far off.

Scientists at Defence Research and Development Canada (DRDC), within the Department of National Defence (DND), have made a breakthrough in Artificial Intelligence (AI) and Autonomy by developing a practical design model that can enable trust in partnering with these technologies. In their research paper IMPACTS: A Trust Model for Human-Autonomy Teaming, Dr. Ming Hou, Dr. Geoffrey Ho, and Maj. David Dunwoody explain the critical parameters against which stakeholders in academia and industry could develop an autonomous mechanism’s trustworthiness.

“The IMPACTS model is aimed to guide the construct of user’s trust in autonomy when designing a human-agent [AI or intelligent machine] partnership and addressing the three context constraints (i.e., human, technology, and environment) as well as six trust barriers [machine’s lack of human sensing, thinking, flexibility, transparency, understanding, effective interface, and human’s lack of understanding in designing lifelong learning and adapting machines]…,” the research paper reads.

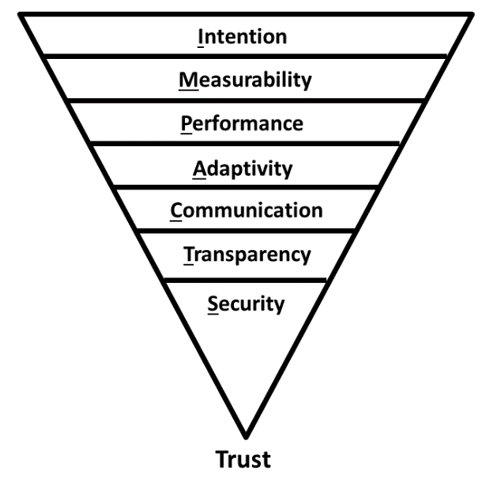

IMPACTS is an acronym for Intention, Measurability, Performance, Adaptivity, Communication, Transparency, and Security. As a promising tool that guides designers in translating the abstract concept of trust into concrete system behavior, it might herald a new dawn of optimism about AI and Autonomy technologies.

Necessity for a trust model

AI and Autonomy are already among us in a variety of forms, some of which might not be obvious. While most of us know about Amazon Echo and Netflix, some might not be aware that even Microsoft 365 and Microsoft Office are AI-powered (Office Intelligent Services). The rush to bring self-driving cars onto the road or robots into the home is an instance of individual-level applications. Mass-level examples are those where humans use this technology for mass audiences and industrial purposes in the civil domain, or for safety and security purposes in the military domain. Irrespective of the level, these technologies touch a significant portion of the human population, if not all of it. On the battlefield, they are already the deciding component.

“Autonomy has been singled out as a key component of the third offset strategy for military applications, which intends to deliver leap-ahead battlefield technologies such as the robotic wingman concept that team up soldiers with autonomous systems to enhance military capability,” the paper quotes from a US Department of Defense report. Think of fighting drones or robotic soldiers that can take enemy fire and fight alongside their human counterparts.

Trust is not an easy or straightforward concept. Translating trust from a concept or a psychological state into a practically meaningful design is a challenge. The DRDC scientists have been researching human-machine interactions for more than two decades and have effectively underscored this abstract concept of trust between humans and machines (both hardware and software). The paper on the IMPACTS model builds on the research of Senior Defence Scientist Dr. Hou, a recipient of the prestigious DRDC Science and Technology Award, who suggests that to capture the complexity of trust between human and machine, the trust model “should be built up based on aspects of human-human interactions.”

The power of the IMPACTS model is rooted in its ability to keep intelligent adaptive systems (IASs), such as AI-enabled autonomous systems, secure against malicious or non-state actors and to keep their actions within moral, ethical, and legal parameters. The topic of IASs is covered in extensive detail in the book Intelligent Adaptive Systems: An Interaction-Centered Design Perspective.

How is the IMPACTS Model different from other efforts in the arena?

IMPACTS’s strength lies in building capability and integrity, the two pillars of trust, into IASs such as AI-powered or autonomous systems that work alongside humans. While the performance capability of machines is undoubtedly enviable, the authors identify that the integrity factor demands further thought and investigation into how it can be measured and enhanced. Carefully considering all known limitations and strengths from previous research, the model promises better human-systems integration through calibrated and assured trust in the partnership. “In some cases, they [machines] also decide which task is best suited to achieve the goal for themselves,” the paper elaborates.

What role does each component of the trust model IMPACTS play?

Intention: Intention here refers to the machine’s desires, which must be to support its human partners, the paper specifies. Intention forms the first guideline for the trust model when developing IAS technologies. According to the scientists, understanding the machine’s intent will help humans delve into “its ethical and legal actions and develop trust in their ethical behavior.” The complexity of the matter is evident from the fact that machines may sometimes be allowed to perform negatively, but within accepted parameters. Additionally, the paper clarifies that the scientists are mindful of the machines’ social etiquette, too, for better integration.

Measurability: Trust sounds fragile, but it does not have to be. Dr. Hou states in the paper, “You may not trust words or even question actions, but should not doubt patterns.” Measurability is all the more important in this context because the machines will be making decisions that determine human life and death. The machine’s behaviors, actions, and patterns need to be measurable so that its intention can be deduced, the research paper confirms.

Performance: Trust in the reliability, consistency, and predictability of a machine’s performance is vital for the partnership. The scientists elaborate, “For trust to be gained, the agent must demonstrate performance that is reliable (consistency over time), valid (the agent performs as intended), dependable (low frequency of errors), and predictable (meeting human expectations).” The model rests on the stance that the machine’s performance should enhance the partnering human’s capabilities and should not be a hindrance or add to the human partner’s tasks.
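For readers who think in code, the four qualities in that quotation could in principle be tracked from a log of trials. The Python sketch below is not taken from the paper: the `Trial` record, its fields, and the specific metrics are assumptions made here purely for illustration, and a real evaluation would need measures chosen by the system's designers.

```python
from dataclasses import dataclass
from statistics import pstdev


@dataclass
class Trial:
    """One observed task run by the agent (hypothetical record, not from the paper)."""
    performed_as_intended: bool   # "valid": the agent did what it was designed to do
    had_error: bool               # "dependable": errors should be infrequent
    met_expectation: bool         # "predictable": matched what the human expected
    score: float                  # task score in [0, 1], used to gauge consistency


def performance_summary(trials):
    """Summarize a log of trials along the four qualities named in the quotation.

    All four measures are illustrative assumptions, not the paper's metrics.
    """
    if not trials:
        raise ValueError("at least one trial is required")
    n = len(trials)
    return {
        # Share of trials where the agent performed as intended.
        "validity": sum(t.performed_as_intended for t in trials) / n,
        # Share of trials with an error (lower is more dependable).
        "error_rate": sum(t.had_error for t in trials) / n,
        # Share of trials that met the human partner's expectations.
        "predictability": sum(t.met_expectation for t in trials) / n,
        # Spread of scores across trials (lower is more consistent over time).
        "score_spread": pstdev([t.score for t in trials]),
    }


if __name__ == "__main__":
    log = [
        Trial(True, False, True, 0.8),
        Trial(True, False, True, 0.8),
        Trial(False, True, False, 0.8),
        Trial(True, False, True, 0.8),
    ]
    for name, value in performance_summary(log).items():
        print(f"{name}: {value:.2f}")
```

Numbers like these would only be one input to trust. The model treats performance as one of seven dimensions, alongside intention, transparency, and the rest.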

Adaptivity: According to the paper, a machine’s adaptivity has to be multidimensional for it to be inherently smart. The machine should quickly adapt to the context and situation and intelligently decide on its action to help the human partner. The level of adaptivity that the scientists are aiming for is quite reassuring: “Especially with dynamic changing environments and human mental states, the agent [machine] needs to have the capability to learn and understand its human partner’s intentions, the changes in the environment, the system status, monitor the human cognitive workload and performance, and guard the human resources and time, and then change its course of action to help the human achieve the team’s common goals,” the authors explain.

The scientists here are talking about a scenario where a machine is competent to decide in complex, dynamic situations “on behalf of its human partner.” They illustrate it with the example of a GPS that not only informs its user but also takes control of the vehicle if, for instance, the driver is driving recklessly. The paper elaborates that this level of adaptivity in the design is necessary to achieve the desired level of trust between humans and machines.

Communication: It is a no-brainer that trust thrives in an environment that ensures two-way communication between sender and receiver. The IMPACTS model carries this concept over from human relationships to the human-machine interface (HMI), where the autonomous system is designed to think for itself. To achieve this, however, every prerequisite must be in place. “HMI also needs to be flexible and offer the types of feedback its human partner would like so that effective communications happen at the right time, in the right format, through the right channel, and to the right recipient,” the paper underscores.

Transparency: Research corroborates that transparency increases trust between parties. Transparency becomes all the more critical if the humans and the autonomous system are at different locations. To be on the same page, the paper reads, humans should be able to know “agent intentions, reasoning, behaviors, and end states.” It says, “Transparency helps achieve this goal by providing display different levels of information with reduced visual complexity (e.g., density, grouping, format, layout) while providing sufficient details like task complexity (e.g., number of paths, number of possible end states), conflicting interdependencies, and uncertainty in linkages.” In this sense, the AI or machine needs to be able to explain its intention, plan, behavior, and potential end states.

Security: Security is the last leg of the IMPACTS trust model. Security efforts take place behind the scenes in almost every situation. The paper stresses that to keep malicious and non-state actors at bay, the human-machine system or IAS must be protected against intrusions and corruption. “Strong security measures are required to establish trust so that people can benefit from these systems,” the scientists explain. “Designers and organizations need to build confidence for autonomous systems and AI technologies by providing goal-directed explanations of how security measures are in place (i.e., at the right level of detail) to protect and ensure the performance of the system.”

IMPACTS is an important addition to the existing knowledge in human-machine teaming, AI, autonomy, weaponry, and national defense. The details of the IMPACTS model convey the complex and intricate building blocks that the scientists at the research agency have researched in minute detail. The core of the development lies in ensuring “trust” in practice before the human-machine collaboration is put to work for national security.

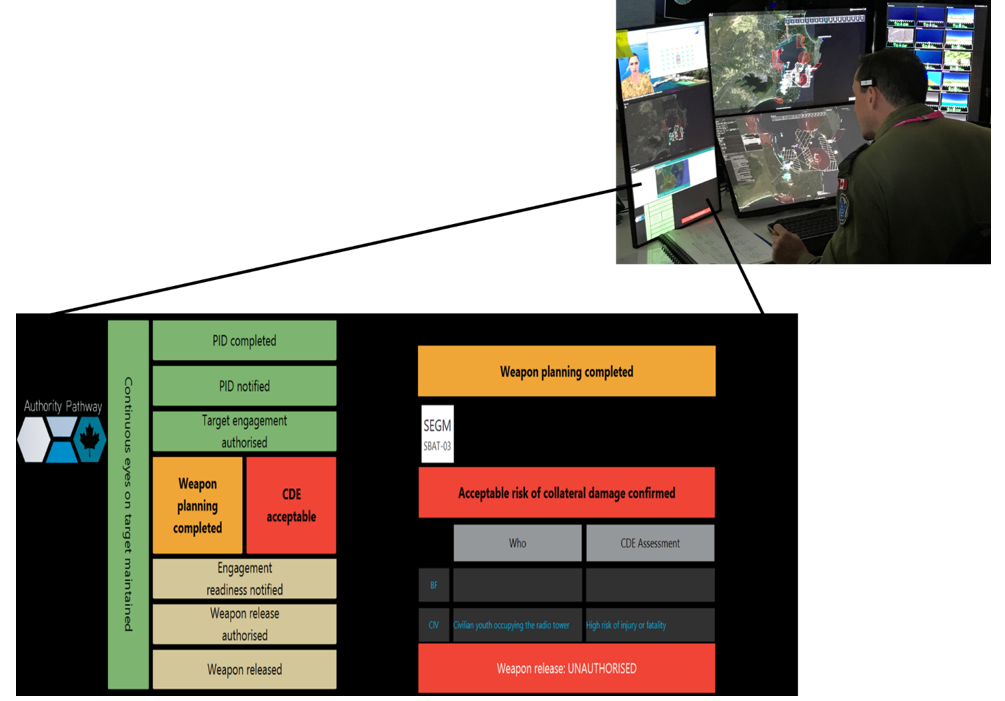

The model also guided the design of an intelligent decision aid, the Authority Pathway for Weapon Engagement (APWE), which addresses the complex, lengthy, and error-prone target engagement process. The APWE assisted an Unmanned Aircraft System (UAS) operator in following rules of engagement and international laws of armed conflict during a large-scale international joint exercise.

The IMPACTS model represents impressive scientific progress, as it gives systems designers a practical tool for guiding the development of effective human-autonomy teaming with enabled, assured, and calibrated trust. This will propel the existing technology and trigger future innovations, making it easier to embrace AI and Autonomy technologies for both civil and military purposes. While Tony Stark believed in running before walking, the scientists at the DRDC have taken a more thorough and practical approach to human-intelligent systems integration.